Let's get straight to it. Formative evaluation is like a chef tasting the soup while it's still cooking. It’s not the final judgment. It's a quick check-in to see if it needs more salt, less spice, or maybe just a little more time on the stove.

In the world of instructional design and eLearning, this means gathering feedback during the learning process to make real-time adjustments. It’s all about improving the course as you go, often using modern tools like an LMS or authoring software like the Articulate Suite to facilitate this feedback loop.

Instead of waiting until the final exam to find out if learners actually "got it," formative evaluation uses small, low-stakes activities all along the way. Think of it as a continuous conversation between your course content and your learner, a key principle in modern instructional design.

This back-and-forth dialogue gives you, the instructional designer, the insights you need to tweak your approach. At the same time, it helps learners pinpoint exactly where they’re struggling and where they need to focus.

The concept came about as a way to do more than just measure a final outcome. The whole point is to improve the learning process itself, not just deliver a final verdict. That’s why these check-ins are usually low-stakes or ungraded, focusing on quality feedback that genuinely guides improvement. You can dig into the full history of formative assessment on Wikipedia if you're curious.

This constant feedback loop is what makes it so powerful for any modern instructional designer.

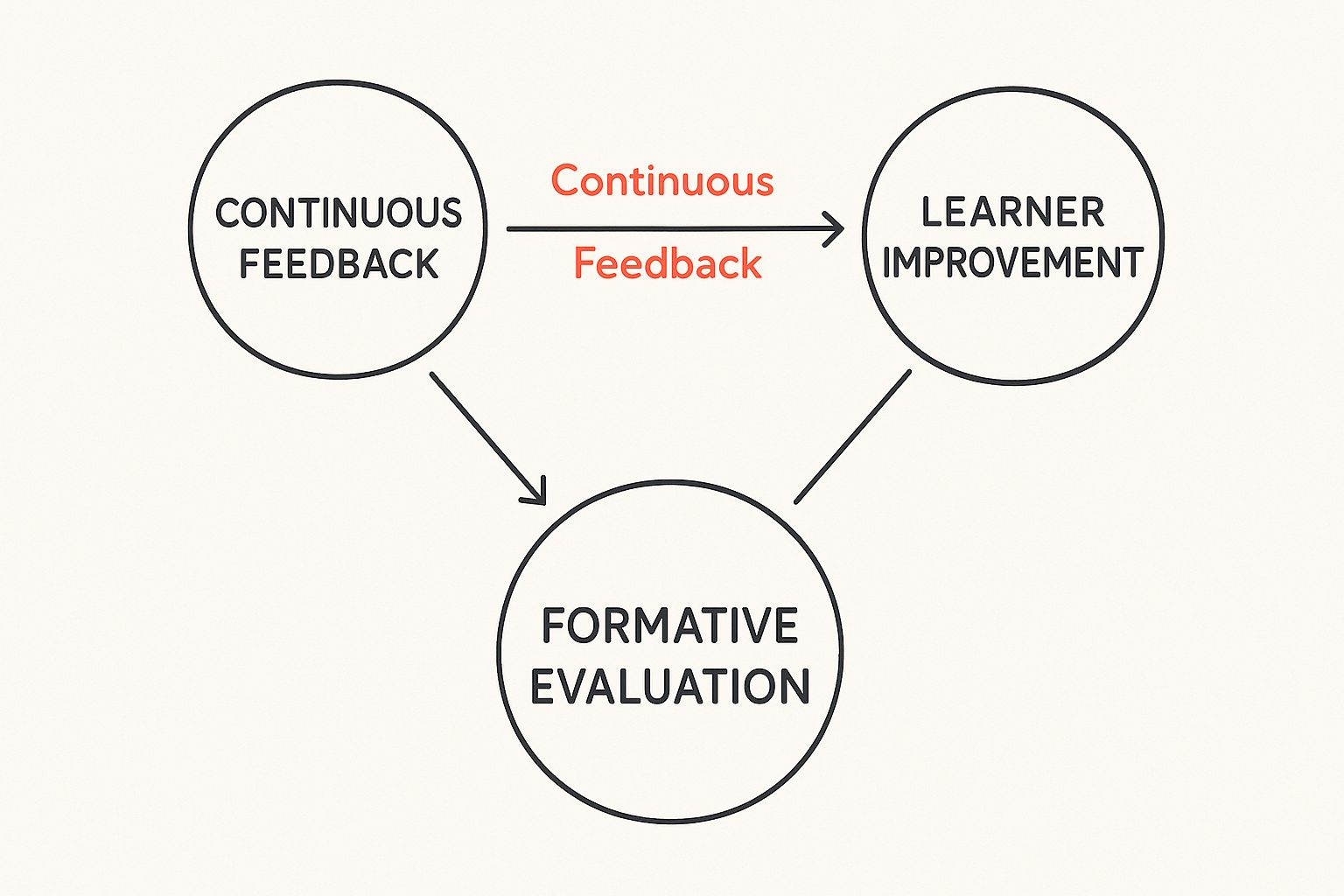

This is what that positive cycle of feedback and improvement looks like in practice.

As you can see, the whole idea is to use that continuous stream of feedback to drive real, meaningful improvement for the learner. It's a proactive approach, not a reactive one.

Now, let's contrast this "in-progress check" with its more famous counterpart: the final exam.

To really get a handle on formative evaluation, it helps to see it side-by-side with summative evaluation. This table breaks down the key differences, helping you see their distinct roles in the learning journey.

So, formative evaluation is for shaping and refining the learning experience, while summative evaluation is for measuring the final result. Both are valuable, but they serve completely different purposes.

So, what makes this "tasting the soup" method so powerful for learning? It’s all about shifting the dynamic. Instead of a high-stakes, one-and-done final exam, learning becomes a supportive, ongoing conversation.

This simple change reframes the core question from "Did you pass?" to "Are you getting this?"—and that makes all the difference in the world.

This back-and-forth creates a feedback loop that truly empowers learners. They aren't just being judged at the end; they're getting guidance all along the way. This builds their confidence and encourages them to actually own their progress, knowing it’s okay to stumble because that’s part of the process.

And this isn't just a gut feeling; the data backs it up. A major analysis of classroom interventions found that students who got consistent formative feedback saw their test scores jump by an average of 20%. That's strong evidence that these ongoing check-ins lead to better results. You can dig deeper into how formative evaluations boost performance if you're curious.

This kind of immediate insight is gold for instructional designers, letting us build learning paths that actually adapt to the person on the other side of the screen.

Think of formative evaluation as your early warning system. By sprinkling quick checks throughout your eLearning course—maybe a short poll after a microlearning video or a quick drag-and-drop built in a tool like Articulate Suite—you can spot where people are getting stuck, right away.

This proactive approach stops tiny misunderstandings from snowballing into huge learning roadblocks. It lets you step in at just the right moment, making sure everyone builds on a solid foundation of understanding.

Let's say a learner is fumbling through a key step in a software simulation. An automated check-in, easily managed through an LMS or LXP, could instantly flag their struggle. Then, it could automatically serve up a quick refresher video or a different example to clarify the point. This nips frustration in the bud and keeps them moving forward.
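To make that concrete, here's a minimal Python sketch of the kind of rule an automated check-in might apply. It isn't how any particular LMS or LXP works. The `StepTracker` class, the two-failure threshold, and the refresher titles are all made up for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical threshold: how many failed attempts on one step trigger help.
MAX_FAILED_ATTEMPTS = 2

# Hypothetical mapping from a simulation step to a remediation resource.
REFRESHERS = {
    "export_report": "video: exporting a report in 90 seconds",
    "apply_filter": "worked example: filtering by date range",
}


@dataclass
class StepTracker:
    """Counts failed attempts per step for a single learner."""

    failures: dict = field(default_factory=dict)

    def record_attempt(self, step: str, correct: bool):
        """Return a refresher to serve, or None if no help is needed yet."""
        if correct:
            self.failures.pop(step, None)  # success clears the struggle flag
            return None
        self.failures[step] = self.failures.get(step, 0) + 1
        if self.failures[step] >= MAX_FAILED_ATTEMPTS:
            return REFRESHERS.get(step, "generic hint: review the previous lesson")
        return None


tracker = StepTracker()
tracker.record_attempt("export_report", correct=False)         # first miss: no help yet
print(tracker.record_attempt("export_report", correct=False))  # second miss: refresher
```

The design choice worth noticing is that a single mistake does nothing. Help only shows up once the learner is clearly stuck, which keeps the check-in from feeling like nagging.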

By tackling these issues in real-time, you're not just making the learning experience more efficient. You're creating something that responds directly to your learners' needs, which leads to way better engagement and knowledge that actually sticks. This is the very heart of what formative evaluation is all about.

Alright, let's get down to brass tacks. Moving from theory to action is where formative evaluation really comes to life. Implementing these checks in your eLearning courses isn't about tearing everything down and starting over. It’s simply about being deliberate in creating moments for feedback throughout the learning process. These are your "chef tasting the soup" moments.

The whole point is to collect useful insights without derailing the learning experience. You want to weave in checkpoints that feel like a natural part of the course, not like a pop quiz. This could be as simple as a quick poll after a video or as involved as a full-blown branching scenario built with a tool like Adobe Captivate.

This kind of thinking is at the heart of good instructional design. These feedback loops fit perfectly into the "Evaluation" phase of well-known frameworks. In fact, you can read more about the ADDIE model for training to see exactly how this connects to the bigger picture of course development.

So, what does this look like in practice? Here are a few hands-on techniques you can build directly into your courses with common authoring tools like Articulate Suite or Adobe Captivate. These aren’t about memorization; they’re about checking for real understanding.

Quick Polls: Just finished a video on a core concept? Throw in a single-question poll. Something like, "Which of these is the most critical safety step?" It gives you an immediate pulse check on how well the group is grasping the material.

Drag-and-Drop Activities: Need to see if learners understand a process? A simple drag-and-drop works wonders. Have them sequence steps, match terms with definitions, or sort items into categories. It’s an active way to see if they can actually apply the knowledge.

Confidence Checks: A simple slider asking, "How confident are you in applying this technique?" can be incredibly revealing. The results can flag topics that need to be revisited, even if learners are getting the answers right on knowledge checks.

These activities are low-stakes, providing instant, helpful data for both the learner and the instructional designer.
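If you can export the raw responses, the confidence-check idea is easy to sketch in a few lines of Python. This is an illustration only: the `(topic, correct, confidence)` data shape, the 1-to-5 slider scale, and both thresholds are assumptions, not something Articulate, Captivate, or any LMS hands you in this form.

```python
from statistics import mean


def topics_to_revisit(responses, min_accuracy=0.8, max_confidence=3.0):
    """Return topics where learners answer correctly but report low confidence.

    `responses` is a list of (topic, correct, confidence) tuples, with
    confidence on a 1-5 slider. Both thresholds are illustrative assumptions.
    """
    by_topic = {}
    for topic, correct, confidence in responses:
        by_topic.setdefault(topic, []).append((correct, confidence))

    flagged = []
    for topic, rows in by_topic.items():
        accuracy = mean(1.0 if correct else 0.0 for correct, _ in rows)
        avg_confidence = mean(conf for _, conf in rows)
        # Right answers plus shaky confidence suggests guessing or fragile knowledge.
        if accuracy >= min_accuracy and avg_confidence <= max_confidence:
            flagged.append(topic)
    return sorted(flagged)


sample = [
    ("lockout", True, 2), ("lockout", True, 3),  # correct but unsure
    ("ppe", True, 5), ("ppe", True, 4),          # correct and confident
    ("spills", False, 2), ("spills", True, 2),   # low accuracy: a different problem
]
print(topics_to_revisit(sample))
```

Topics with low accuracy are left out on purpose. Your regular knowledge checks already catch those, so this pass only surfaces the ones a score alone would hide.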

When you're dealing with more complex skills, branching scenarios are one of the best formative tools in your arsenal. You place learners in realistic situations and let them make decisions, which allows you to see their critical thinking skills in action within a safe space.

The magic of branching scenarios is the immediate, relevant feedback. If a learner makes a wrong turn, the scenario doesn't just say "incorrect." It shows the consequences and guides them back to the right path, reinforcing the lesson when it matters most.

These aren't just fancy quizzes. They are practice fields for the real world. This type of hands-on application is what makes learning stick, and it’s a perfect example of what formative evaluation is all about.
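Under the hood, a branching scenario is just a small graph: situations connected by choices, with a consequence attached to each choice. Here's a rough Python sketch of that structure. The scenario content and the `play` helper are invented for illustration, and authoring tools store this in their own formats.

```python
# Each node is a situation; each choice carries its consequence (the feedback)
# and the node the learner lands on next. All content here is made up.
SCENARIO = {
    "start": {
        "prompt": "A customer is upset about a late delivery. What do you do?",
        "choices": {
            "apologize": ("They calm down and explain the problem.", "resolve"),
            "blame_shipping": ("They get angrier and ask for a manager.", "start"),
        },
    },
    "resolve": {
        "prompt": "You offer a refund or a replacement. The customer accepts.",
        "choices": {},  # no choices: this is an ending
    },
}


def play(scenario, decisions, start="start"):
    """Walk the scenario with a list of decisions; return the final node and feedback."""
    node, feedback_log = start, []
    for decision in decisions:
        choices = scenario[node]["choices"]
        if decision not in choices:
            break  # ending reached or unknown choice: stop the walk
        consequence, node = choices[decision]
        feedback_log.append(consequence)
    return node, feedback_log


final_node, log = play(SCENARIO, ["blame_shipping", "apologize"])
print(final_node)
for line in log:
    print("-", line)
```

Notice that the wrong choice loops back to the same situation after showing its consequence. That's the "guide them back to the right path" behavior, expressed as an edge in the graph.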

Not too long ago, implementing formative evaluation was a grind. It was a manual, time-consuming slog of collecting feedback and slowly piecing it together. Thankfully, technology has completely changed the game, acting as a massive accelerator for instructional designers. It bridges the gap between evaluation theory and the software we use every single day.

Today's tools automate that critical feedback loop, making it almost effortless to gather real-time data. Instead of waiting days to review quiz responses or survey answers, you get instant insights. This speed lets you make adjustments on the fly, turning a static course into a living, breathing learning environment.

This ability to collect data and act on it right now is the whole point of formative evaluation—it’s all about continuous improvement driven by timely feedback.

Chances are, the learning platforms you already use are built with formative evaluation in mind. They give you the tools to sprinkle feedback opportunities directly into the course, making the process feel natural for both you and your learners.

LMS and LXP Platforms: Think of your Learning Management System (LMS) or Learning Experience Platform (LXP) as your command center. They’re constantly tracking quiz results, poll responses, and completion rates in the background, giving you a bird's-eye view of where learners are getting stuck or flying high.

Authoring Tools: This is where the real magic happens. Software like the Articulate Suite or Adobe Captivate lets you build all sorts of engaging check-ins—from simple knowledge checks to complex, branching scenarios that really test a learner’s decision-making skills.

The secret weapon of these tools is their power to give immediate, automated feedback. When a learner gets something wrong, the system can instantly offer a hint, point them to a resource, or serve up a quick explanation. That’s how you reinforce a concept at the precise moment it's needed most.

The next big trend in instructional design is Artificial Intelligence (AI). AI is starting to play a huge role in formative evaluation by going way beyond simple right-or-wrong feedback. It can analyze open-ended answers, spot common themes where learners are getting confused, and even offer personalized guidance and adaptive learning paths on a massive scale.

Imagine an LMS that doesn't just grade a quiz but analyzes why a learner is struggling with a concept and automatically suggests a specific microlearning video to help. This isn't science fiction; it's the direction the industry is heading, making the dream of truly personalized, continuous improvement a practical reality for everyone.

Okay, enough with the theory. The best way to really get a feel for what formative evaluation is all about is to see it in action. These aren't just abstract ideas—they're everyday tools instructional designers use to build courses that actually work.

Let’s look at three completely different scenarios where formative evaluation is the secret sauce. You’ll see how the same core idea can be adapted to fit totally different goals.

Think about that mandatory compliance course everyone has to take. Instead of waiting for a big, scary final exam, this course has short, ungraded mini-quizzes after each microlearning module. The company's LMS keeps an eye on the answers in the background.

If, say, 40% of employees get the same question wrong about a critical new policy, the system flags it. The instructional designer sees this data right away and can jump in. Maybe they add a quick explainer video or a clearer example to that specific module. The problem gets fixed before it turns into a real-world compliance issue.
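The flagging rule itself is simple arithmetic: misses divided by attempts, compared against a threshold. A minimal Python sketch, assuming you can export quiz results as `(question_id, correct)` pairs (that shape and the `flag_questions` name are assumptions for this example):

```python
from collections import defaultdict


def flag_questions(answers, threshold=0.40):
    """Return {question_id: miss_rate} for questions missed at or above threshold.

    `answers` is an iterable of (question_id, correct) pairs.
    """
    attempts = defaultdict(int)
    misses = defaultdict(int)
    for question_id, correct in answers:
        attempts[question_id] += 1
        if not correct:
            misses[question_id] += 1
    return {
        q: misses[q] / attempts[q]
        for q in attempts
        if misses[q] / attempts[q] >= threshold
    }


# 4 of 10 learners miss q1; only 1 of 10 misses q2.
results = [("q1", False)] * 4 + [("q1", True)] * 6 + [("q2", True)] * 9 + [("q2", False)]
print(flag_questions(results))
```

In practice you'd probably also want a minimum number of attempts before flagging anything, so one unlucky early learner doesn't trigger a rewrite.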

Now, imagine you’re learning new software using a tool like Adobe Captivate. You aren't just watching a demo; you're actually clicking through tasks in a simulated version of the app. The system gives you instant hints, like "Oops, try clicking the 'Save' button first," guiding you as you go.

You can't even move on to the next lesson until you nail the key tasks in the current one. This hands-on approach builds real skills, not just passive knowledge. Plus, the designers get a goldmine of data showing where users struggle most, which helps them make the software itself more intuitive in the next update.
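That "can't move on yet" gate boils down to a set comparison. Here's a tiny Python sketch with hypothetical task names. It isn't Captivate's actual logic, just the idea:

```python
def missing_tasks(completed, required):
    """Return the required tasks the learner has not yet completed, in order."""
    return [task for task in required if task not in completed]


def can_advance(completed, required):
    """The next lesson unlocks only when nothing required is missing."""
    return not missing_tasks(completed, required)


required = ["create_file", "save_file", "share_file"]  # hypothetical key tasks
completed = {"create_file", "share_file"}

print(can_advance(completed, required))
print(missing_tasks(completed, required))
```

Returning the list of missing tasks, rather than a bare yes or no, is what lets the course tell the learner exactly what to practice next.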

Finally, let’s picture a leadership program on an LXP. After learning about conflict resolution, new managers are asked to write a quick reflection on a real challenge they’ve faced. Then, they jump into a peer-review session where they give and get feedback on anonymous submissions from their cohort.

This isn't about getting a grade. It's about sparking deep thought and learning from each other. The very act of giving and receiving constructive feedback is a formative experience, building practical skills in a safe space.

In every one of these cases, formative evaluation is what helps you genuinely measure training effectiveness, because it focuses on making the learning better while it's still happening.

Alright, so you’ve got the theory down. But when it comes to actually putting formative evaluation into practice, a few questions always pop up. Let's walk through the most common ones so you can feel confident adding these feedback loops to your next eLearning project.

Think of this as the practical, "how-to" part of the conversation. We're moving from the 'what' to the 'how' to make this whole process feel less like a textbook definition and more like a tool you can actually use.

How often should you add a check-in? Honestly, there's no magic number. The right answer is always, "it depends." For a quick microlearning video on a single concept, a simple check-in right at the end is all you need. The feedback is instant, and the loop is tight.

But for a bigger, more complex skill, it makes more sense to build in these checkpoints after each major topic or module. The whole point is to give timely feedback while the material is still fresh in the learner’s mind, not to drown them in constant quizzes.

The rule of thumb here is "just in time," not "all the time." You're looking for logical moments in the learning journey to see if everyone is on track before moving on.

Should these check-ins count toward a grade? In a word: no. The goal of formative evaluation is all about feedback and improvement, not about slapping a grade on something. When you keep these activities low-stakes (or even ungraded), learners are way more likely to give you an honest try without stressing about how a mistake might impact their final score.

It really shifts the whole dynamic to focus on the process of learning. Sure, your LMS or LXP can track completion to make sure people are participating, but the real gold is in the insights you and the learner get from the interaction itself.

Can these check-ins be automated? You bet they can. This is where modern eLearning tech really shines and makes your life a whole lot easier. The platforms you're already using are probably built for this.

The trick is to use your tech to deliver feedback that’s both immediate and scalable.

Does any quiz question count as formative evaluation? Not quite. It really boils down to your intent and what you do with the answers. Just asking a question is a simple recall check. A formative evaluation, however, uses that question (or activity) to gather specific info that tells both you and the learner what needs to happen next.

The feedback isn't generic; it's targeted and designed to guide an immediate improvement. You're creating a deliberate loop: check for understanding, give specific feedback, and then adjust the learning path based on the results. It’s a strategic cycle, not just a simple Q&A.

At Relevant Training, we specialize in creating dynamic eLearning that actually engages learners and gets real results for small and medium-sized businesses. If you're ready to build more effective training, let's talk about how we can help.